CyberEther Remote – Take Two

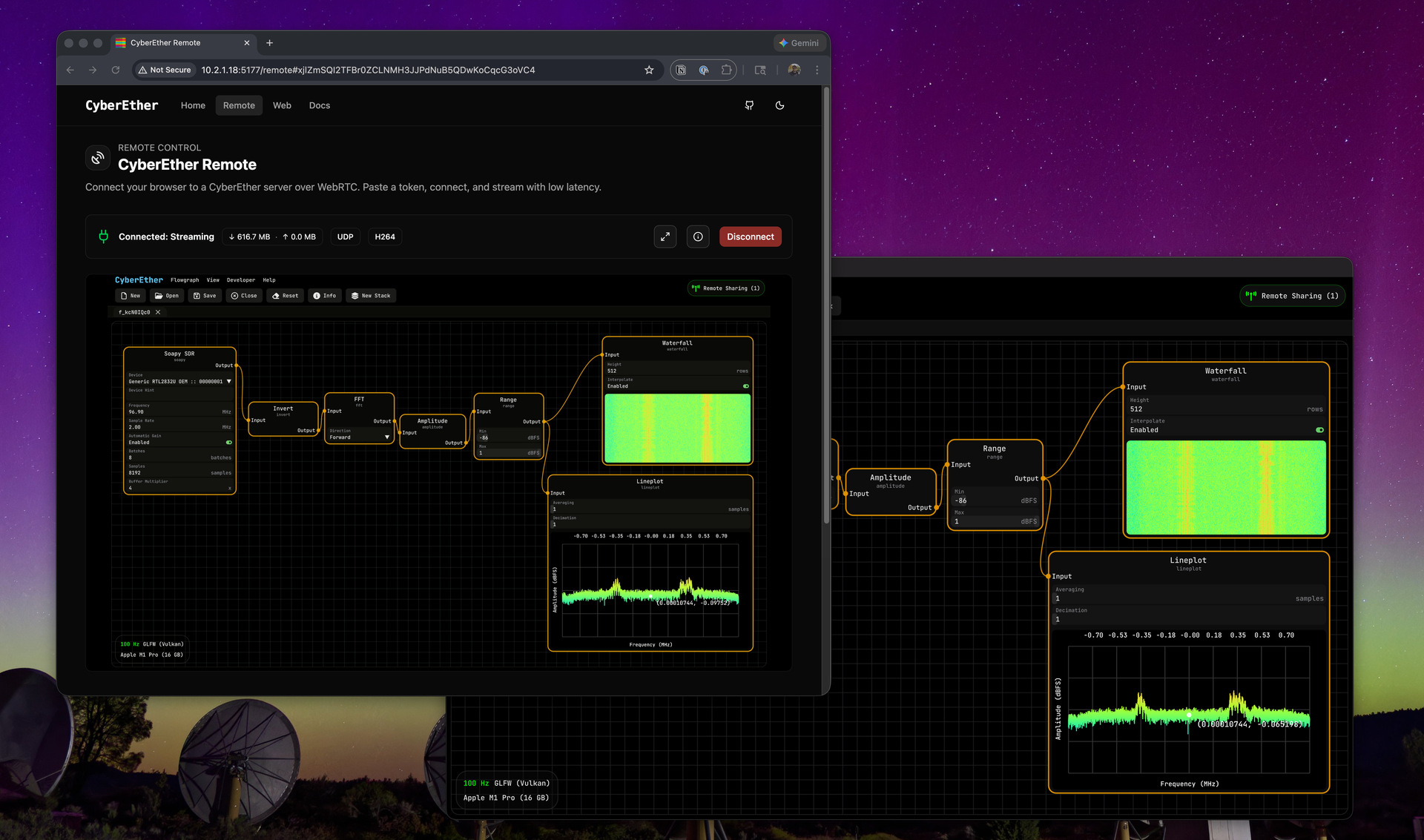

TL;DR: CyberEther Remote lets you run signal processing on a headless server close to your sensor, encode the rendered interface as video, and stream it anywhere with minimal latency. The new WebRTC-based implementation fixes the networking headaches of the original version, works in any browser, and doesn't require CyberEther on the client side.

It's Always Memory

I created CyberEther to have a platform where I could test and deploy my digital signal processing algorithms. These included heterogeneous code such as CUDA for NVIDIA and Metal for Apple Silicon devices. One thing in common between all of them is that data locality matters a lot.

Many people porting their CPU code to discrete accelerators make the same age-old mistake: thinking you can accelerate just the hot part of your code on the GPU and leave the rest on the CPU as-is. This is a terrible idea, because every time you jump between compute units, you need to move a ton of data. This is particularly true with non-unified architectures where the accelerator doesn't share physical memory with the CPU. In the end, the main bottleneck of a compute pipeline isn't compute at all, it's memory.
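To put rough numbers on this, here is a back-of-the-envelope model in Python. The buffer size, link bandwidth, and kernel time are illustrative assumptions rather than CyberEther measurements, and the model assumes the result copied back is as large as the input.

```python
# Illustrative assumptions, not measurements.
BUFFER_BYTES = 1e9        # 1 GB of samples per batch
LINK_BYTES_PER_S = 16e9   # roughly the theoretical peak of a PCIe 3.0 x16 link
KERNEL_SECONDS = 0.005    # assumed GPU time for the "hot" part of the code


def transfer_seconds(n_bytes, link_bytes_per_s):
    """Time to copy a buffer to the accelerator and copy a same-sized result back."""
    return 2 * n_bytes / link_bytes_per_s


copy_s = transfer_seconds(BUFFER_BYTES, LINK_BYTES_PER_S)
total_s = copy_s + KERNEL_SECONDS
copy_share = copy_s / total_s

print(f"copies: {copy_s * 1e3:.0f} ms, kernel: {KERNEL_SECONDS * 1e3:.0f} ms")
print(f"share of time spent moving data: {copy_share:.0%}")
```

Under these assumptions the copies dominate the kernel by a wide margin, which is why keeping the whole pipeline on the accelerator usually beats offloading a single hot loop.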

The Remote Idea

What does this have to do with CyberEther Remote? Well, CyberEther was designed to work on the machine directly connected to the sensor. This sensor can range from a temperature sensor to a particle accelerator, but for simplicity, let's imagine a software-defined radio.

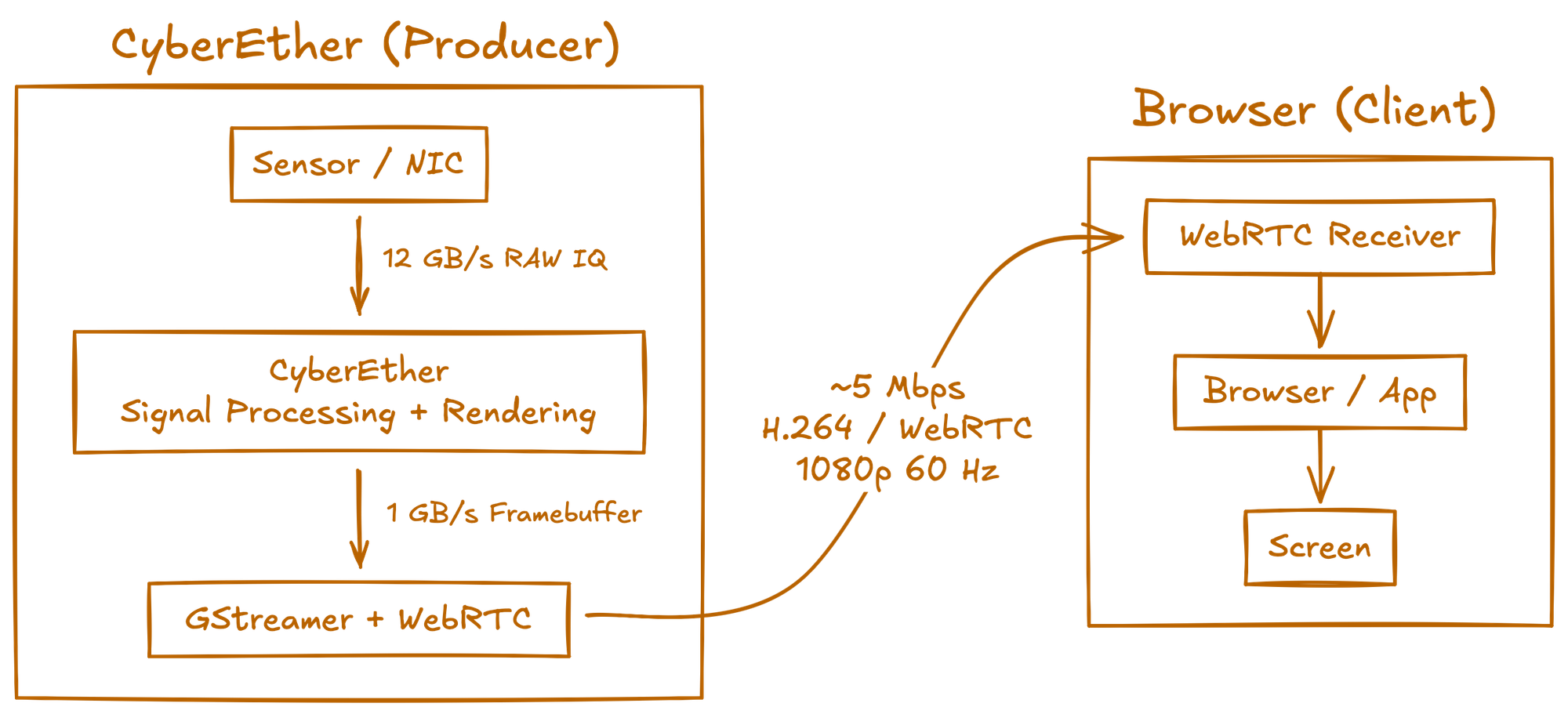

When you're streaming 100 MHz of raw I/Q from such a device, you're probably close to saturating USB 3.0, whose signaling rate is 5 Gbps. This is a lot of data. For perspective, a typical Ethernet link carries only 1 Gbps, and you're lucky to get 100 Mbps upload on a normal internet connection.

The idea behind CyberEther Remote is that you receive this data locally, parse it, run your fancy signal processing, and render the result on screen with a waterfall, lineplot, or whatever visualization you need. Then you encode the rendered frame with a very efficient video encoder like H.264 and send it over the network to where the user actually is. With this approach, instead of sending 5 Gbps of raw signal, you send just 5 Mbps of nearly lossless 1080p video with minimal latency.
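The arithmetic behind these figures can be sketched in a few lines of Python. This assumes that "100 MHz of raw I/Q" means 100 million complex samples per second with 16-bit I and 16-bit Q components; the sample format is an assumption, not something stated above. USB 3.0 uses 8b/10b line coding, so at most 8 of every 10 bits on the wire are payload.

```python
def iq_rate_bps(sample_rate_hz, bits_per_component):
    """Raw bit rate of a complex (I/Q) sample stream."""
    # Each complex sample carries one I and one Q component.
    return sample_rate_hz * 2 * bits_per_component


USB3_LINE_RATE_BPS = 5e9
USB3_PAYLOAD_BPS = USB3_LINE_RATE_BPS * 8 / 10  # 8b/10b line coding overhead

raw_bps = iq_rate_bps(100e6, 16)        # assumed 16-bit I and 16-bit Q
usb_utilization = raw_bps / USB3_PAYLOAD_BPS

VIDEO_BPS = 5e6                         # encoded 1080p stream, per the text
reduction = USB3_LINE_RATE_BPS / VIDEO_BPS

print(f"raw I/Q stream: {raw_bps / 1e9:.1f} Gbps")
print(f"share of usable USB 3.0 bandwidth: {usb_utilization:.0%}")
print(f"5 Gbps of signal vs 5 Mbps of video: {reduction:.0f}x less data")
```

With a different sample format (for example 8-bit components) the raw rate halves, but the gap between the sensor link and the video stream stays at several orders of magnitude.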

Why Not VNC?

This idea isn't new. Many protocols can do this, with VNC being the most common. But VNC suffers from latency and quality problems. It also requires a valid window manager session.

This is why I decided to implement this myself within CyberEther. Since I control the render pipeline, I can easily tap into the graphical framebuffer, copy it to CPU memory, and encode it. In some cases, where you're rendering on an NVIDIA GPU, CyberEther can feed the Vulkan framebuffer directly to the integrated video encoder present in almost all NVIDIA GPUs (NVENC) and get an encoded stream without passing a single byte of data through the CPU. This approach also improves latency significantly.

What is better than one CyberEther? Two CyberEther!

— Luigi Cruz (@luigifcruz) October 27, 2023

My latest "in and out 20 minutes" is a remote client. This renders the headless instance on a server, encodes the frame via a codec, and transmits the buffer to a remote client over UDP. It also supports mouse commands. pic.twitter.com/NG0ztcW1HS

Why Take Two?

So why "Take Two"? This feature has actually been in CyberEther since 2023. I used it extensively at my job at the Allen Telescope Array to test our new signal processing pipeline. I hosted CyberEther inside a Docker container running on a headless server in Hat Creek (the Allen Telescope Array location) and streamed it in real time all the way to another continent. I used it like a local instance while processing 90 Gbps of radio data.

Although the first version could stream high-resolution video with low latency, it had several problems:

- It used only UDP to send video data from producer to consumer, which can be problematic in many network configurations, such as behind NAT or restrictive firewalls.

- It required a separate TCP connection to send telemetry, including encoding information and mouse/keyboard events.

- It required the consumer to also have a CyberEther instance running to decode the transmission, making the barrier to entry higher.

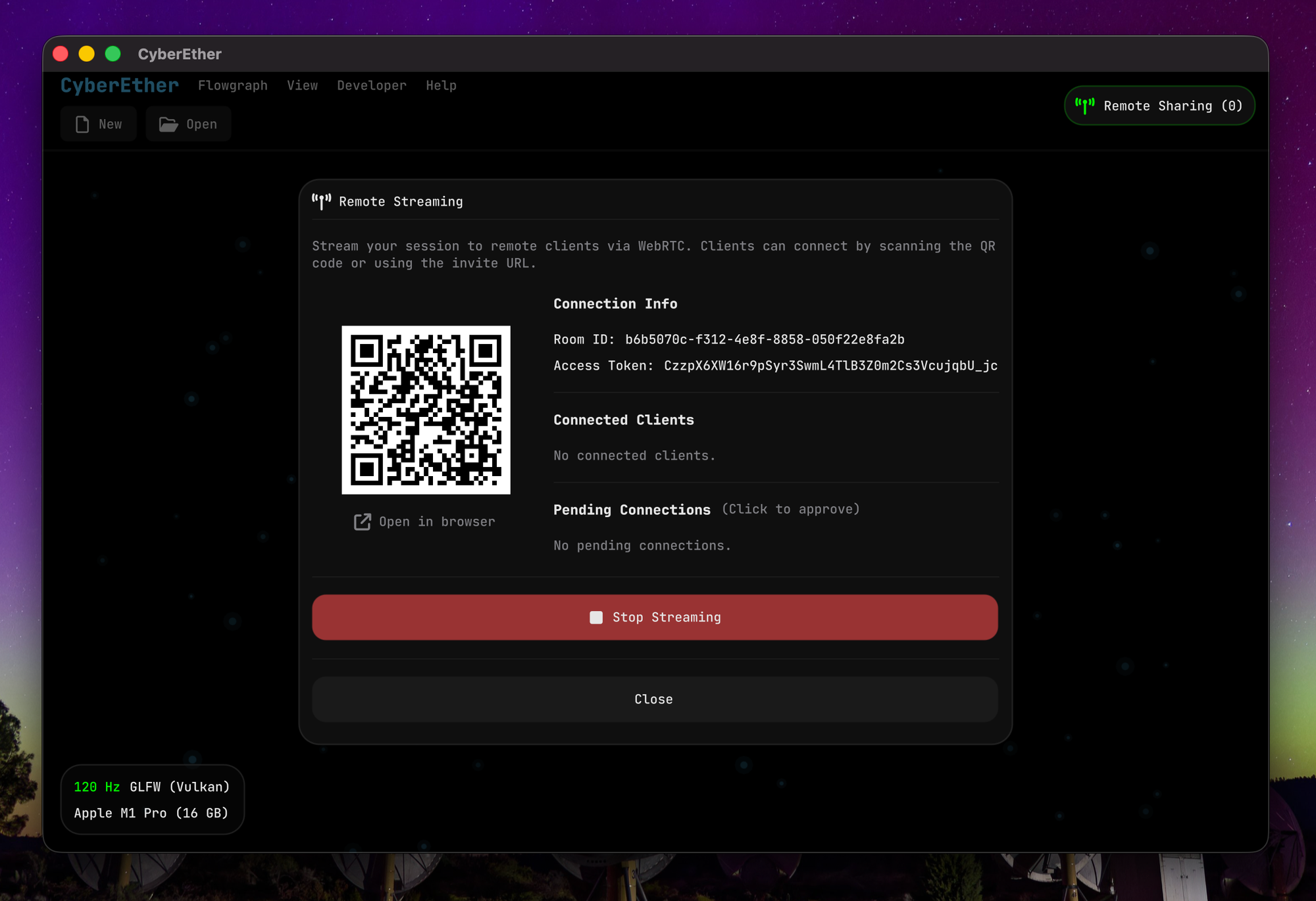

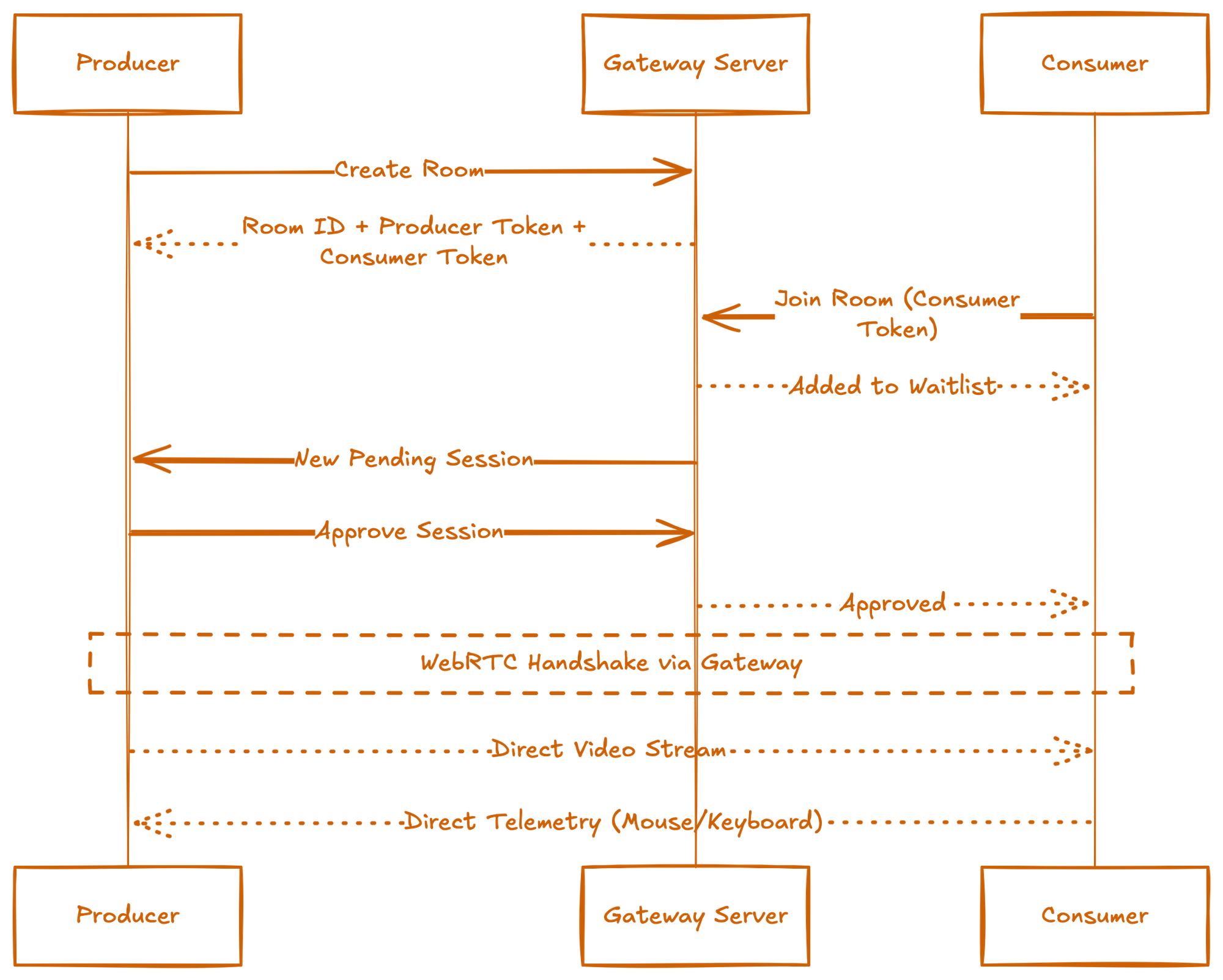

The new version solves all of these problems by using WebRTC for video and telemetry transport. WebRTC implements the handshake and NAT traversal necessary for peer communication, so I don't need to reinvent the wheel. In the future, I also want to use the data side-channel to send low-bandwidth data between peers.

Other improvements include:

- Support for multiple consumers.

- Browser support for decoding, including mobile browsers.

- Support for broadcasting over the internet (not currently implemented, but planned).

It's not all roses, though. Currently, only the Vulkan backend supports remote streaming, but the remote interface is API-agnostic, and I plan to add Metal support soon. This shouldn't be much of a problem because almost all platforms support Vulkan.

The main disadvantage is that WebRTC requires a central server to coordinate the initial handshake until direct data channels are established. To solve this, I'm planning to host a free-to-use gateway available via the CyberEther website. It will also host a viewer that can connect to any locally accessible CyberEther instance with the remote feature enabled. I also plan to release a standalone TypeScript library that manages the WebRTC connection and user interaction events so users can create their own implementations.

How It Works

As you may know, CyberEther uses low-level graphics APIs such as Vulkan, Metal, and WebGPU to render the interface. This unshackles it from common limitations like large binary files and the inability to squeeze the last drop of performance from the hardware.

In this context, it allows me to copy the framebuffer used to render the interface directly and encode it with standard GStreamer code. It also allows CyberEther to run on systems without a graphical environment (no GNOME, Plasma, etc.) by creating headless surfaces directly on the graphics card. After GStreamer acquires the uncompressed interface frame, it uses standard software video encoders to create a video stream, then uses the default WebRTC sink to send the video to the consumer.

Telemetry such as mouse and keyboard events travels bidirectionally between consumer and producer using a data channel. On the consumer side, user interface events are encoded into a protocol and sent to the producer, where they're unpacked and injected into CyberEther's event queue.
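As a minimal sketch of what encoding an interface event for a data channel could look like, the Python below packs a mouse event into a fixed-size binary message and unpacks it on the other side. The wire format, the event codes, and all names (`MouseEvent`, `encode_event`, `decode_event`) are hypothetical and chosen for illustration; they are not CyberEther's actual protocol. A plain `deque` stands in for the producer's event queue.

```python
import struct
from collections import deque
from dataclasses import dataclass

# Hypothetical wire format (not CyberEther's actual protocol):
# kind (uint8), button (uint8), x (float32), y (float32), little-endian.
EVENT_FORMAT = "<BBff"
EVENT_SIZE = struct.calcsize(EVENT_FORMAT)

# Hypothetical event codes.
MOUSE_MOVE, MOUSE_DOWN, MOUSE_UP = 0, 1, 2


@dataclass(frozen=True)
class MouseEvent:
    kind: int
    button: int
    x: float  # normalized to [0, 1] so it does not depend on the viewer's size
    y: float


def encode_event(event: MouseEvent) -> bytes:
    """Consumer side: pack an event into a binary data-channel message."""
    return struct.pack(EVENT_FORMAT, event.kind, event.button, event.x, event.y)


def decode_event(payload: bytes) -> MouseEvent:
    """Producer side: validate the message length and unpack the event."""
    if len(payload) != EVENT_SIZE:
        raise ValueError(f"expected {EVENT_SIZE} bytes, got {len(payload)}")
    return MouseEvent(*struct.unpack(EVENT_FORMAT, payload))


# Stand-in for the producer's event queue.
event_queue = deque()

click = MouseEvent(MOUSE_DOWN, 0, 0.5, 0.25)
message = encode_event(click)             # sent over the data channel
event_queue.append(decode_event(message))  # injected on the producer side
```

Normalized coordinates are one possible design choice here: they let viewers with different window sizes drive the same remote framebuffer without negotiating resolutions.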

The WebRTC process follows a room-based model with an approval workflow. When a client wants to establish a connection, it first requests the creation of a new room. The system generates a unique room identifier along with two authentication tokens: a producer token for the host/broadcaster and a consumer token for viewers. The response also provides the signaling server URL and client domain needed for the WebRTC handshake.

New connection requests are placed in a wait list, where they await approval from the producer. The producer, authenticated via their token, can view pending sessions, approve them to grant access, or list currently active sessions. The system enforces a maximum of 8 concurrent sessions per room to manage resources. The producer retains full control and can destroy the entire room when finished, disconnecting all participants. This approval-based architecture gives the host explicit control over who can join their WebRTC stream, rather than allowing open access.
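The room lifecycle described above can be modeled as a small in-memory state machine. The Python below is a sketch under stated assumptions, not the gateway's implementation: the `Room` class, its method names, the token formats, and the requirement that viewers present the consumer token when asking to join are all hypothetical. Only the two-token scheme, the wait list, the approval step, the limit of 8 sessions, and room destruction come from the description.

```python
import secrets

MAX_SESSIONS = 8  # concurrent sessions per room, as described above


class Room:
    """Hypothetical in-memory model of a room with an approval workflow."""

    def __init__(self):
        self.room_id = secrets.token_hex(8)
        self.producer_token = secrets.token_urlsafe(16)
        self.consumer_token = secrets.token_urlsafe(16)
        self.pending = []   # wait list of session ids awaiting approval
        self.active = []    # approved session ids
        self.closed = False

    def _require_producer(self, token):
        if not secrets.compare_digest(token, self.producer_token):
            raise PermissionError("invalid producer token")

    def request_join(self, consumer_token):
        """Viewer asks to join; the request lands in the wait list."""
        if self.closed:
            raise RuntimeError("room was destroyed")
        if not secrets.compare_digest(consumer_token, self.consumer_token):
            raise PermissionError("invalid consumer token")
        session_id = secrets.token_hex(4)
        self.pending.append(session_id)
        return session_id

    def approve(self, producer_token, session_id):
        """Producer moves a pending session to the active list."""
        self._require_producer(producer_token)
        if session_id not in self.pending:
            raise KeyError(session_id)
        if len(self.active) >= MAX_SESSIONS:
            raise RuntimeError("room is full")
        self.pending.remove(session_id)
        self.active.append(session_id)

    def destroy(self, producer_token):
        """Producer closes the room and drops every participant."""
        self._require_producer(producer_token)
        self.pending.clear()
        self.active.clear()
        self.closed = True


room = Room()
viewer = room.request_join(room.consumer_token)
room.approve(room.producer_token, viewer)
print(f"room {room.room_id}: {len(room.active)} active, {len(room.pending)} pending")
```

A real signaling server would also need to expire stale requests and persist state across restarts, which this sketch leaves out.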

What's Next

CyberEther Remote lets you run heavy signal processing on a headless server close to your sensor, encode the rendered interface as video, and stream it anywhere with minimal latency. The new WebRTC-based implementation fixes the networking headaches of the original version, works in any browser, and doesn't require CyberEther on the client side.

I'm just finishing up the implementation and making sure everything looks good on the endpoint before launching CyberEther Remote publicly in Version 1.0, coming in the next couple of months!
